It’s been almost a year since I introduced Apache Airflow at my company. This post is a quick look back at that decision.

Although Airflow is in production, so far it has been used only as an alternative to the cron daemon. One of the biggest reasons is that every team member was busy handling other tasks. Airflow’s steep learning curve was another. So I didn’t push team members to use Airflow, but encouraged them to move their cron jobs over one by one whenever they had the chance.

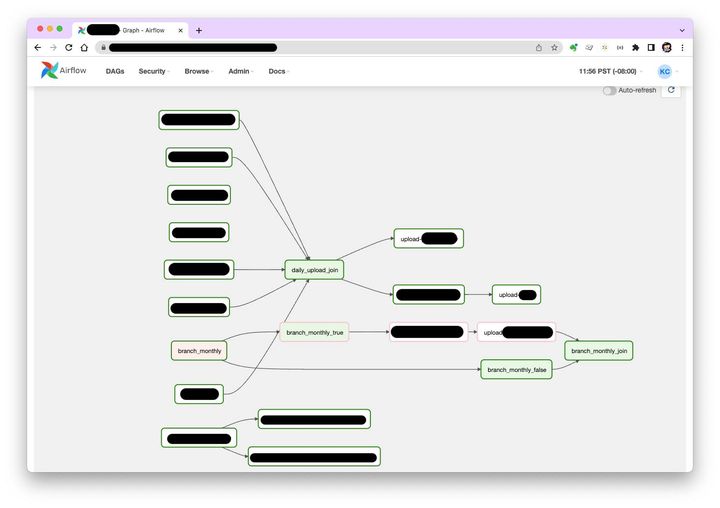

Just replacing cron benefited us in many ways: centralized management of various batch jobs, a dashboard that shows the running status of scheduled tasks, and alarms for job failures. There is also a downside: if Airflow fails, all the batch jobs fail with it. However, the advantages so clearly outweighed this disadvantage that the team members moved their batch jobs to Airflow one by one. We now have over 30 DAGs in Airflow.

Along the way, we settled on some rules and built some tools:

- Cron jobs for host management, such as logrotate, remain in crontab. Batch jobs that need central management, such as data backups and ETL, go to Airflow.

- DAGs are kept in a GitHub repository. When a DAG is pushed to the master branch of the repository, it is deployed to Airflow automatically.

- Local time is used instead of UTC, because California still observes daylight saving time. With schedules written in UTC, every job would shift by an hour against the local clock twice a year.

- When a DAG fails, an alarm is sent through our messenger. We had to write our own Python module for this integration.
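To give an idea of what the failure alarm can look like, here is a minimal sketch. It is not our actual module. It assumes the messenger accepts a JSON payload of the form `{"text": ...}` on an incoming webhook; the URL, the payload shape, and the function names are all made up for illustration. Airflow passes a context dictionary to a function registered as `on_failure_callback`, and the sketch reads the failed task and the exception from it.

```python
import json
import urllib.request
from types import SimpleNamespace

# Hypothetical webhook endpoint; replace it with your messenger's real URL.
WEBHOOK_URL = "https://messenger.example.com/hooks/airflow-alerts"


def build_failure_message(context):
    """Turn an Airflow-style callback context into a short alert text."""
    ti = context["task_instance"]
    error = context.get("exception")
    return f"[Airflow] FAILED dag={ti.dag_id} task={ti.task_id} error={error}"


def post_to_messenger(text):
    """Send the text to the webhook (the payload shape is an assumption)."""
    payload = json.dumps({"text": text}).encode("utf-8")
    request = urllib.request.Request(
        WEBHOOK_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    urllib.request.urlopen(request, timeout=10)


def notify_failure(context, send=None):
    """Callback intended to be passed as on_failure_callback."""
    message = build_failure_message(context)
    # `send` can be swapped out so the callback is testable without a network.
    (send or post_to_messenger)(message)
    return message


# Dry run with a fake context: collect the message instead of posting it.
sent = []
fake_context = {
    "task_instance": SimpleNamespace(dag_id="daily_backup", task_id="dump_db"),
    "exception": RuntimeError("disk full"),
}
notify_failure(fake_context, send=sent.append)
print(sent[0])
```

In a DAG file, a function like this would typically be registered through `default_args={"on_failure_callback": notify_failure}` so that every task in the DAG reports its failures the same way.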

One of the most important reasons I adopted Airflow was to control the dependencies between batch jobs. Until now I haven’t pushed this goal on my team, but I think I now can.
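To make "dependencies between batch jobs" concrete, here is a small sketch of the idea. It is not Airflow code: it uses `graphlib` from the Python standard library (3.9+), and the job names are hypothetical. Each job lists the jobs it must wait for, and the sorter produces an order in which no job runs before its upstream jobs have finished. Plain cron cannot express this and can only approximate it with carefully spaced start times.

```python
from graphlib import TopologicalSorter

# Hypothetical job names; each key maps to the set of jobs it must wait for.
dependencies = {
    "extract_orders": set(),
    "extract_users": set(),
    "transform": {"extract_orders", "extract_users"},
    "load_warehouse": {"transform"},
    "backup_warehouse": {"load_warehouse"},
}


def run_order(deps):
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
print(order)
```

In an Airflow DAG the same relationships are declared between tasks with the `>>` operator, for example `transform >> load_warehouse`, and the scheduler starts a task only after its upstream tasks have succeeded.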